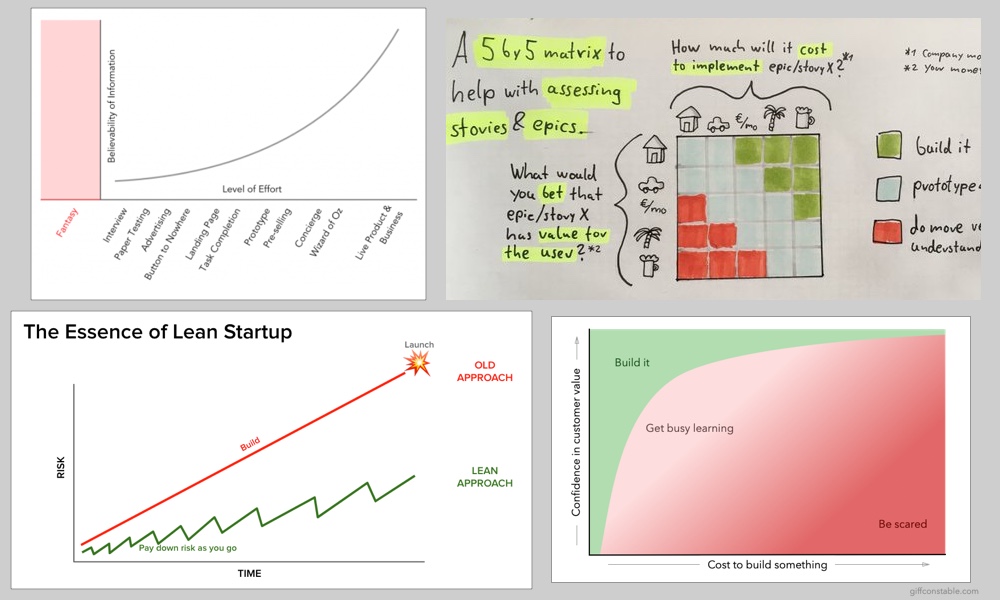

In my two books on testing new business ideas, I have a diagram called the “truth curve”. I often get credit for a variation that my friends Jeff Gothelf and Jeff Patton created, but their diagram makes a different, equally valuable point. I’m going to take the liberty of calling their graph the “build curve”. Let’s look at them both.

The Truth Curve

The truth curve asks, “Can I believe what I am learning?”

When you are in your own head, “inside the building”, you are in fantasy land. Real truth lives in the upper right: your product is live and people are buying or not buying, using or not using, retaining or not retaining. Your data is your proof. But you can’t wait that long to test your ideas, or you will make too many mistakes, waste too much time, and suffer needlessly costly failures.

That concept is elegantly shown in a visualization Janice Fraser sketched for me on a napkin a decade ago.

Moving along the truth curve, we start with low-lift tactics like interviewing customers and running small experiments, and we work our way up to bigger, more experiential experiments, where we study someone’s behavior with some kind of simulation of the intended experience.

We start with lightweight methods because they bring us insights quickly, but their believability is weaker. You can’t validate an idea with those methods alone. You need to apply your judgment and keep a healthy skepticism about both what you are learning and the idea itself.

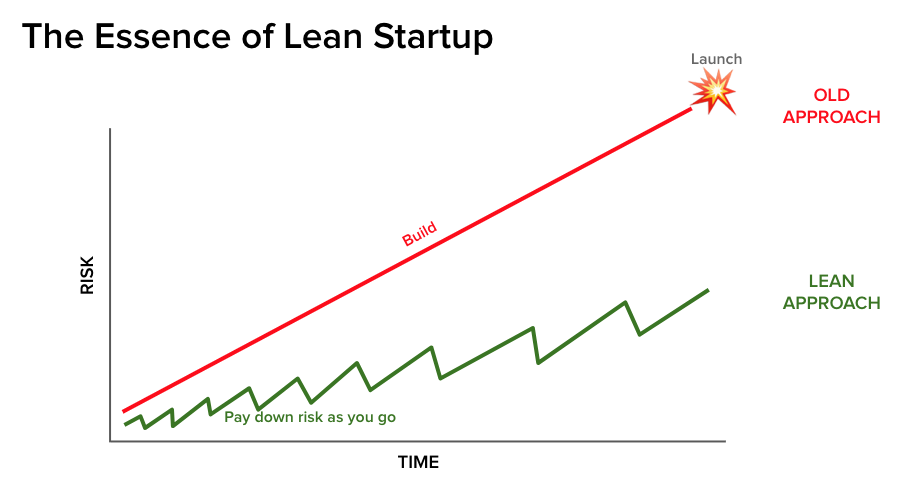

The Build Curve

Now let’s turn to Jeff & Jeff’s diagram. Their question is equally valid but different: “Should we go ahead and build this, or should we learn more first?”

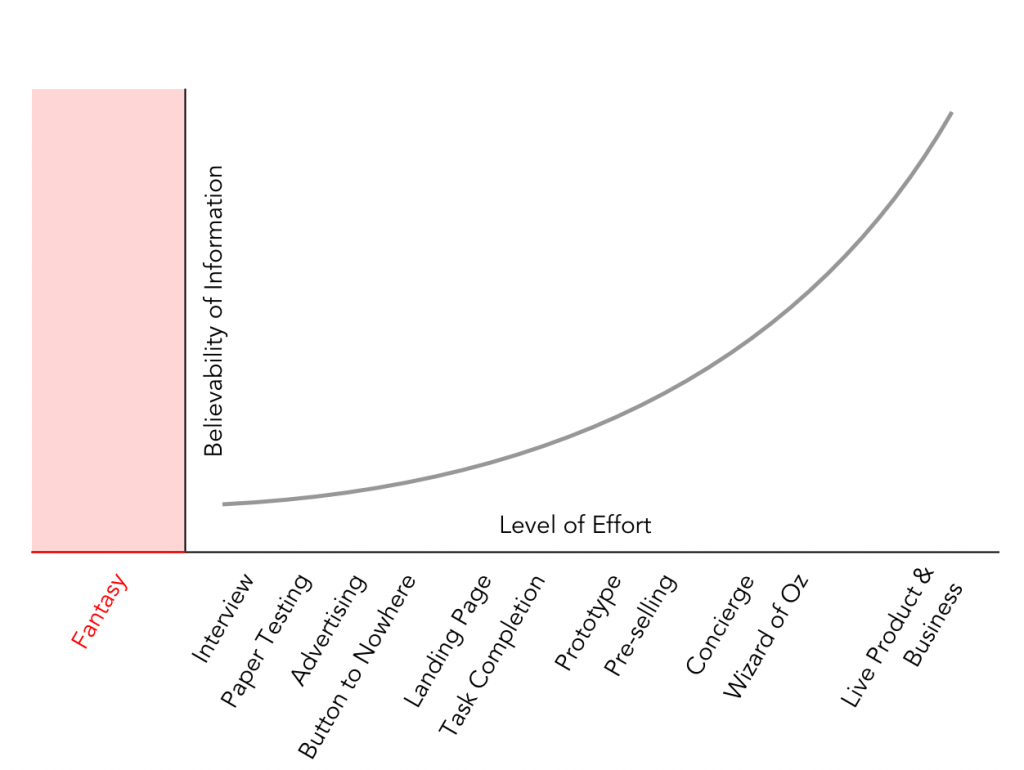

Here’s a fun visualization of their concept by Hias Wrba, who drew this after being in one of Jeff & Jeff’s workshops:

They are plotting confidence in the value for the user (“how much would you bet this is right?”) against the cost to build that idea. If you have enough confidence to bet your house and the idea is also cheap to build, you should go ahead and build it. Conversely, if you’re not willing to bet much and it’s expensive to build, you need to learn more and de-risk the idea. Ideas in the middle deserve skepticism rather than blind faith, so they too should run the gauntlet of further customer discovery and experimentation.

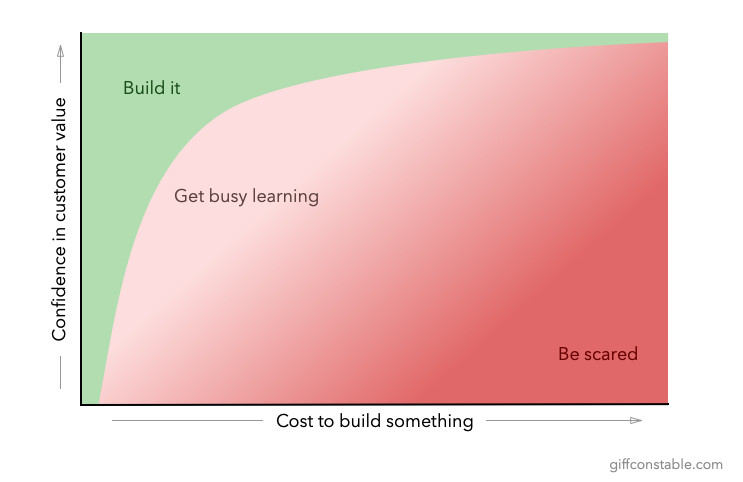

Here’s my own variation, a little more akin to the “truth curve”:

As you can see, I do not think this relationship is as linear or as evenly balanced as the simplified 5×5 matrix suggests, though I suspect the matrix is easier to absorb. After almost 30 years in software, I start to question my confidence very quickly as cost goes up (and I mean cost in the widest sense: money, people, time, focus, etc.).

Of course, confidence that there is value for the user is not enough on its own, either. Any product idea also has to align with your company’s strategy and deliver value (ROI) to the business.

A Side Story

When I first got to Meetup as CPO, the only real “experimentation” I saw being done was split-testing after features were finished. For big ideas, that’s way too far down the funnel: too much investment in “building” before learning (see the “essence of lean” diagram above). While we had excellent UX research and data science teams, those inputs weren’t enough on their own to make big decisions with confidence.

I encouraged the teams to run more “hacky”, manual experiments far earlier in their process. But then some teams swung too far, running experiments for small, no-brainer ideas. Eventually we got to the point where the teams could comfortably hold all of the concepts diagrammed above in their minds. They could 1) get data early while staying aware of the limits of that data, and 2) use common sense to know when to research a thing, test a thing, or just build a thing.

This stuff takes time to master. In over a decade as something of a leader in the lean startup community, I’ve never seen anyone get awesome at it overnight. It takes practice to identify risks and then design a smart, efficient path of research and experiments. If you want help, I hope my books Talking with Humans and Testing with Humans can be useful.

Is there a higher-level lesson here?

Zooming out, my key lesson is that we always need to view our actions with a certain amount of skepticism, even as we drive forward. Is this experiment worth running? Is this feature worth building? Is this startup idea worth years of my life?

Addendum

Part of the fun of Twitter is the exchange of ideas, when people aren’t being mean to each other. I really like this response from Phil Bergdorf when I tweeted this post: