How do you choose when to do an experiment versus an interview? I received a really interesting question last week at the Lean Startup Conference. At the event, I ran two workshops—one on customer discovery (the topic of Talking to Humans) and one on experiments (the topic of Testing with Humans). The asker was trying to understand a shopper’s experience as the person went through certain retail environments. She asked me how she could uncover that information in a sit-down interview. I said I wouldn’t even try. Instead, I would walk around the stores with people and hear their reactions and decisions in the moment. In other words, I would do ethnographic-style research.

But behind the question is a bigger one: how do you choose your tactic? And I might extend it to: when do you run qualitative research versus experiments?

You want to do interviews and experiments in parallel, since they are complementary, but you apply them in different ways and for different purposes. A simple rule of thumb is that interviews give you the greatest insights and experiments give you the greatest proof. Or another way of phrasing it: experiments give you evidence of whether something will happen; interviews help you understand why.

Interviews are relatively fast and cheap to run. They don’t require you to be present at the moment of “experience”, which can be very hard to pull off in many contexts. They do require you to dig deeper than surface-level answers. Done right, they let you really understand what is going on in someone’s head. But there is a downside: human memories are faulty. We often don’t really know (or accept) our own underlying motivations and behavior. As an interviewer, you can’t always take things as literal truth, but you can get a read on how people think they experienced something or decided something.

On the other hand, with an experiment, you create a structured, constrained test where someone has to do something, and then you measure whether they do it. The believability of the results will depend on how you designed the experiment. Furthermore, you don’t know why they took the actions they did unless you talk to them.

With every tactic, you need to ask yourself about the believability of what you are learning. You want to ask yourself whether you can get a better signal in another way, or if you can get an equivalent signal in a cheaper/faster way.

Examples

- If I wanted to understand what went into a multi-million dollar purchasing decision from two years ago, I would do that in an interview. It would be inefficient to wait for the next time a similar purchase came about, not to mention difficult to get into those decision meetings.

- If I wanted to understand how someone makes decisions as they walk through a specific physical environment (say, a Sephora store), I would want to see them in that actual environment. I might first observe them in the store and talk to them after, or I might run something more akin to a concierge test, where I act like a personal shopper, engaging with them in the store the entire time.

- If I wanted to know whether someone would pay for a product, I would run experiments where I try to sell it to them for a set amount. This is because I don’t really believe the answers you get when you ask, “How much would you pay?”

- If I wanted to see how much general interest there was in an idea, I might do a mixture of tactics. I might interview people to get a sense for their frustration level with the problem, and also investigate if they had tried to solve the problem before with money or effort. I might also run ads or put up a landing page or order form experiment to measure conversion rates. The former would give me wonderful qualitative insights. The latter would be easier to run at scale. Doing both would give me two different vectors of information into the question.

The more tactics and variations you know, the better. You want a lot of tools in your toolbox. To overuse the metaphor, you do not want to be that person who only has a hammer and so treats everything like a nail. That means trying different approaches on for size where you think they might make sense.

Here are some of the experiment archetypes we discuss in more detail in Testing with Humans:

- Landing Page: where you create a simple web page (or website) that expresses your value proposition and gives the visitor the ability to express their interest with some sort of call to action.

- Advertising: paying money to put your value proposition in front of a relevant audience in the form of an ad to see whether people respond/convert.

- Promotional Material: a variation of an advertising test where you produce some sort of online or offline promotional material to test reactions or generate demand.

- Pre-selling (including crowd-funding): where you try to book orders before you have built the product.

- Paper Testing: applying primarily to software and information (data, analysis, media, etc.) products, paper tests are where you mock up an example of an application user interface or report and put it in front of a potential customer.

- Product Prototype: a working version of your product or experience that is built for learning and fast iteration, rather than for robustness or scale.

- Wizard of Oz: where the customer thinks they are interfacing with a real product (or feature), but where your team provides the service in a manual way, hidden behind the scenes (hence the name).

- Concierge: where you manually, and overtly, act as the product you eventually want to build (unlike a Wizard of Oz where people are behind the scenes).

- Pilots: where you put an early version of your product in the hands of your customers, but you scale down the size of the implementation and put a finite time period on the project.

- Usability: where you check to see if someone can effectively use a product without getting stuck or blocked.

The more you know, the easier it is to choose the right tactic for the right problem, or create variations to learn what you need to learn.

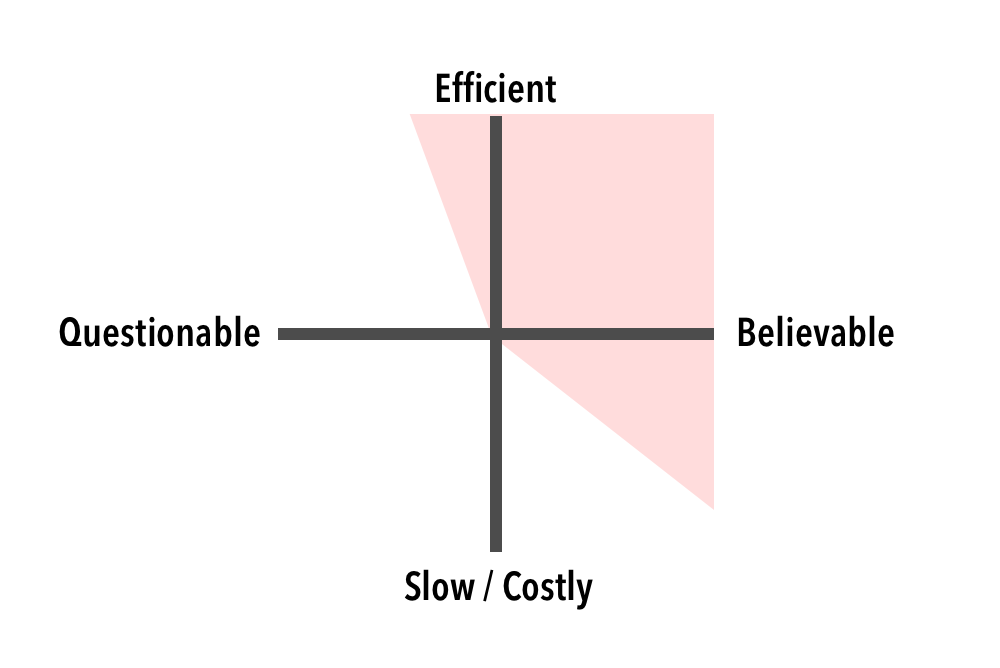

The truth curve that I reference in my books can be reframed as a 2×2 quadrant that pits the believability of information against the speed and cost of that information. In the image above, I sketched an “acceptable zone” in red where you want to spend your time.

You obviously don’t want to do anything too questionable or too costly. Ideally, you choose a tactic with both high speed and high believability, but that’s not always possible in the real world. Sometimes tactics do get big and costly. Avoid that where you can; it’s only worth it if you are tackling a really big risk and you think you’ll get believable intel. It’s never a good idea to gather excessively questionable data, even if the methods are efficient, because then you can’t make informed decisions.
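If it helps to see the quadrant as a decision rule, here is a rough sketch in Python. Everything in it is an illustrative assumption rather than something from the books: the 0-to-1 scores, the 0.5 threshold, and the names `Tactic` and `zone` are made up for this example, and in practice you would judge believability and cost for your own situation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tactic:
    """A research or experiment tactic with hypothetical 0-to-1 scores."""
    name: str
    believability: float  # 0.0 = very questionable, 1.0 = highly believable
    efficiency: float     # 0.0 = slow and costly, 1.0 = fast and cheap


def zone(tactic: Tactic, big_risk: bool = False, threshold: float = 0.5) -> str:
    """Place a tactic in the 2x2 described above (threshold is an assumption)."""
    believable = tactic.believability >= threshold
    efficient = tactic.efficiency >= threshold

    # Questionable data is never worth gathering, even when it is cheap.
    if not believable:
        return "avoid: too questionable"
    # Believable and fast/cheap is the ideal corner.
    if efficient:
        return "ideal"
    # Believable but big and costly: only worth it for a really big risk.
    return "acceptable for a big risk" if big_risk else "avoid: too costly"


if __name__ == "__main__":
    # Scores below are invented purely to exercise each branch.
    print(zone(Tactic("quick believable test", 0.8, 0.8)))             # ideal
    print(zone(Tactic("cheap but shaky survey", 0.2, 0.9)))            # avoid: too questionable
    print(zone(Tactic("large pilot", 0.8, 0.2)))                       # avoid: too costly
    print(zone(Tactic("large pilot", 0.8, 0.2), big_risk=True))        # acceptable for a big risk
```

The point of the sketch is the ordering of the checks: believability is tested first, so efficiency can never rescue a questionable tactic, while a costly tactic can still be justified when the risk is big enough.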

Tools in the Toolbox

If it’s good to learn as many variations of both research and experiments as possible, how can you do so? For a quick, practical primer on interviewing your market, read Talking to Humans. In the sequel, Testing with Humans, we have a whole chapter on experiment archetypes. There are also many blog posts from folks in the lean startup community.

Going further, I highly recommend looking across different disciplines. Entrepreneurs should try to learn from the design community, and designers should try to learn from the lean startup community.

If you’re interested in learning more from the design community, check out Erika Hall’s Just Enough Research and the many books from Rosenfeld Media (in particular authors like Tomer Sharon and Steve Portigal). You can also get good ideas from the growth hacking community, but just be clear about whether your goal is to learn something or to drive a number.