First Round Capital has a wonderful online network, basically a white-labelled Quora, for people at their portfolio companies. I answer questions now and then. Here are some of the Q’s I have answered over the last year or two:

What are best practices for including and leveraging the design team in your product development process?

I boil it down to two essentials, and I think a lot of modern software teams are moving to this model:

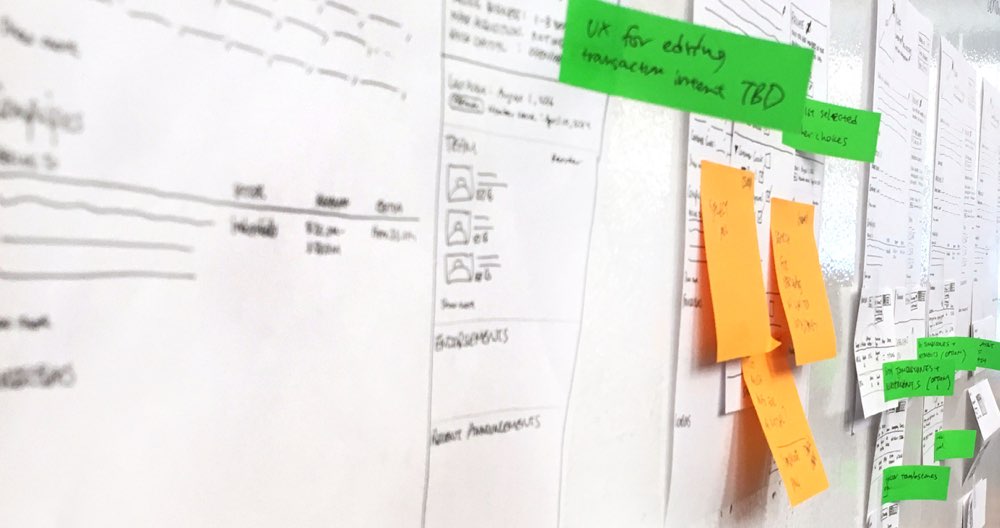

1. Avoid running the product design team as a service center. Instead I believe in embedding dedicated designers on small cross-functional teams. As you scale up, you might find that it makes sense to centralize an additional research capability, but that still doesn’t preclude dedicated, embedded design on teams.

2. Hire designers who think about design with a capital-D, i.e. they care as much about understanding and solving the customer’s goals as they do visuals and usability.

As your teams are running to hit whatever goal has been set, ideally they are doing a mix of research and experimentation as well as building, shipping and measuring. Designers bring a different perspective to the problem/solution process from PMs and engineers. It’s powerful to have that in the mix and leads to better decisions. So essentially, get design involved from the start right through to the final validation that a feature actually worked.

Note: Even though, in this model, designers and PMs are distributed across teams, they should still report to a senior member of their craft who can help drive excellence across all the teams.

Who runs user testing at your startup? What have you seen work or not?

My belief is that user testing and user research are constants across the lifecycle of all product work, which means each team is responsible for doing them. One also needs to be practical: the shape and cadence of research depend on the nature and risks of the idea or problem/goal/feature/strategy you are investigating.

So I’m not a fan of centralized usability or research (or design service centers) at startups. Market feedback needs to be visceral/personal, very “first person” to the team working on the problem/outcome. It’s important to also figure out how to get engineers exposed to the process and feedback.

One exception to this is recruiting, particularly for consumer products. Having a centralized responsibility for ensuring 1. a constant flow of customers/users and 2. smooth logistics, both for the users and for deciding which team gets to talk to whom, can bring extra efficiency to the process. Meetup, which took a constant cadence of user feedback to the next level, used this approach to good effect.

I do think this starts to change as you scale to a very large product team. Building a research competency can ensure better training of your product team, a better interviewee experience, and better externalization of “learnings” across the org. But even in this situation, the product teams themselves need to be fierce about maintaining their own pipeline of first-person knowledge.

What quantitative metrics can help assess product-market fit?

Sean Ellis asks customers how disappointed they would be to no longer have your product. Working across a lot of different startups, he came up with the heuristic that you want to see more than 40% be very disappointed.
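As a rough illustration of the arithmetic behind that heuristic, here is a minimal sketch in Python. The answer labels, the function names, and the sample survey data are all made up for the example; only the 40% "very disappointed" bar comes from the heuristic above.

```python
from collections import Counter

# The bar from the heuristic: more than 40% answering "very disappointed".
VERY_DISAPPOINTED_THRESHOLD = 0.40


def very_disappointed_share(responses):
    """Return the fraction of responses that are 'very disappointed'."""
    if not responses:
        raise ValueError("need at least one response")
    # Normalize case and whitespace so "Very Disappointed " still counts.
    counts = Counter(r.strip().lower() for r in responses)
    return counts["very disappointed"] / len(responses)


def passes_heuristic(responses):
    """True when the share is strictly above the 40% bar."""
    return very_disappointed_share(responses) > VERY_DISAPPOINTED_THRESHOLD


# Hypothetical survey of 20 customers (invented data, for illustration only).
survey = (
    ["very disappointed"] * 9
    + ["somewhat disappointed"] * 8
    + ["not disappointed"] * 3
)

share = very_disappointed_share(survey)
print(f"{share:.0%} very disappointed; passes: {passes_heuristic(survey)}")
```

With a sample this small, the result is a conversation starter rather than a verdict.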

David Skok also recently did a long post on this topic.

The hard truth is that an early semblance of PM-fit can often be a mirage, simply because of the dynamics of the technology adoption curve and the fact that customer types change as you try to scale. It’s why the book Crossing the Chasm is still relevant so many years later. While I really like Sean’s framework, and actively put it into practice, I think every startup has to zoom in on its own context and its own key indicators of scalable, repeatable growth and business model success. Enterprise (B2B), consumer (B2C) and marketplaces all have different characteristics. It can be brutally hard in the early stage to uncover your consistent and authentic predictive metrics, but it is worth the effort.

One of the biggest mistakes when it comes to examining PM-fit is focusing excessively on growth numbers rather than retention/churn numbers. Startups are often good at goosing/driving growth, but the real question is whether the foundation is solid and scalable.

Given my startup is too small to need managers, what can I do to gain management experience now and position myself to be an obvious choice when we do grow to need managers?

Leadership is less about telling people what to do and more about helping a team be their best. You don’t need reporting lines to be able to practice and build a leadership state of mind. Some questions to ask yourself and work on:

- Are you raising the optimism and morale of the team?

- Are you actively helping to ensure shared focus and shared understanding across the team?

- Are you helping the team set smart goals?

- Are you thinking about ways to improve not only your own skills but also the skills of people around you? Most importantly, are you taking action on those ideas?

- Are you mentoring, collaborating, challenging, and inspiring the team to do better work? Are you doing this in a way that does not come across as arrogant, meaning that you are a good listener and open to others’ ideas?

- When you interview job candidates, are you setting a really high bar? Are you thinking about finding someone with new perspectives? Are you thinking about how the person will fit in as a puzzle piece to lift up vs weigh down the team?

- Are you thinking about the impact and connectivity of the team on and to the rest of the organization?

You should begin to lead through influence before you actually get direct reports.

How do you balance working on long-term larger projects with short-term quick fixes at your startup?

We currently operate outcome-oriented teams. We keep the teams focused on their goal (KPI) but do weave in critical/major bug fixes. If a small feature request comes in, we first ask if it would contribute in a meaningful way to the goal. If not, it likely goes on the back burner, but we will still ask whether the lift would be outsized enough, relative to the work involved, to justify breaking the flow of the team. Making an exception is a judgement call, but one should always be open to exceptions as long as they don’t become the rule.

A shelved feature might come out of the icebox in one of two ways:

1. a team gets assigned a new goal where the feature is aligned and worthwhile;

2. we do a “small but good improvements” sprint between major goal initiatives, just as we might do a focused bug-fix or tech-debt sprint. In any of those circumstances, you need to do a fierce prioritization effort, but it can be useful to steal a march here and there so that you can stay focused the rest of the time.